The Importance of Team Culture Alignment

Every team has a culture, whether you define it intentionally or let it form on its own. It can be magical when a team has aligned on culture from top to bottom. Understanding h...

So, You're a Manager Now

Transitioning from being an Individual Contributor (IC) to a Manager is one of the most difficult shifts any person may make in their career. This represents a recognition of gr...

The Middle Path of Planning and Reflection

Thinking about the future or past is an important practice, but we must be careful not to take either too far. Buddhists call this the Middle Way or the Middle Path. Taking a...

Micromanagement and Trust

If you ask 100 employees if they like to be micromanaged, 90 of them will say no, and the remaining 10 will walk away in disgust at you having the gall to ask the question. Micro...

On Giving Advice

A friend of mine, Arvind, writes a blog called “myotherramblings.” It’s a place where he can write about anything he’d like to. Recently he wrote a post on The Dark Side of Advi...

Emergency Preparedness During Coronavirus Frenzy

In the midst of Coronavirus frenzy, I decided to bring up a blog post I’ve been slowly writing for some time now. I am what I would consider a tentative “prepper”; I like to be pr...

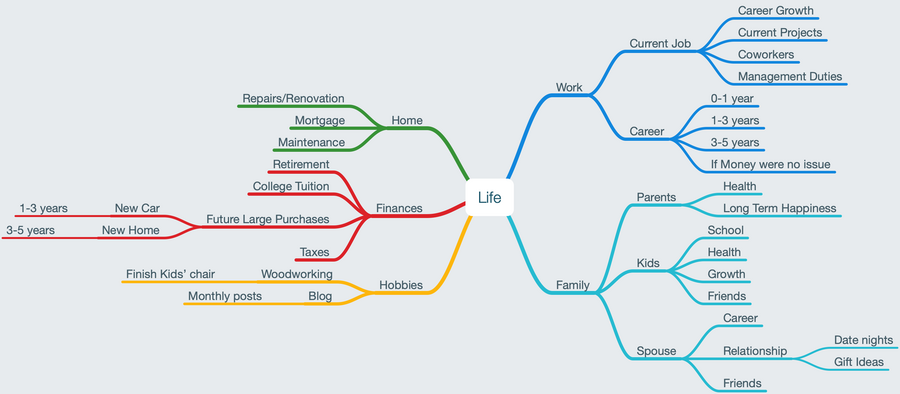

Mind Map Your Life

Recently there have been a lot of things going on in my life. So many that I started to find it difficult to keep track of everything. I needed a way to organize my thoughts so ...

Start With the Hard Part

I like Seth Godin’s blog, and I recommend you check it out. He writes daily, so it can be overwhelming, but there are some really good nuggets in there.

Delight in the Details

In trying to understand something, we can often fall into a pattern of mapping the new thing to something we already know. This is a great way to get a foundation for understandi...